From Productivity to Value: The Leadership Shift AI Is Accelerating

- Dr. Stefanie Huber

- Dec 18, 2025

- 6 min read

The Harvard Trust Triangle vs. AI: Where Leaders Still Win

AI isn’t just changing workflows. It’s changing identity. And that’s why so many high-performing leaders feel strangely… off right now.

Not because they can’t keep up. Not because they’re not smart enough. But because a question has entered the room that most organizations still refuse to name:

If AI can do more and more of what I used to be valued for… what am I worth now?

I’m seeing this everywhere in my coaching. And I’m seeing it in the work I’m being asked to do right now.

The moments that made it obvious

These days, I’m getting requests from many sides (corporates, consultancies, and agencies, in key functions and support functions alike) to design and facilitate workshops that would have sounded like science fiction two years ago:

Redesigning services and role profiles

Mapping what we want humans to own going forward

Defining what we want AI to take over

And deciding what the “new edge” of a role becomes once AI does XYZ

On paper, this looks like strategy. In reality, it’s psychology. Because the moment you ask a team, “What should AI do?” you’re also asking:

“What will you still do?”

“What will you be known for?”

“What will make you valuable?”

And here’s the uncomfortable truth: Most leaders are not afraid of AI. They’re afraid of becoming replaceable. Outdated. No longer good enough. Yes, it feeds our inner impostor syndrome. Yay.

I talked about this on a keynote stage — and it’s even more relevant now

Earlier this year, in my keynote at Booking.com, I shared an angle that still feels wildly under-discussed. We keep talking about the edge of AI. But the real leadership question is:

What is the edge of humans? Not machines.

Because right now, many organizations run the entire debate through one narrow lens:

Cognition.

Who thinks faster.

Who produces more.

Who summarizes better.

Who codes quicker.

And yes — if you only look at cognition as “speed + volume,” humans will lose. But that’s not the full picture.

Harvard's Trust Triangle: why the “human edge” is bigger than cognition

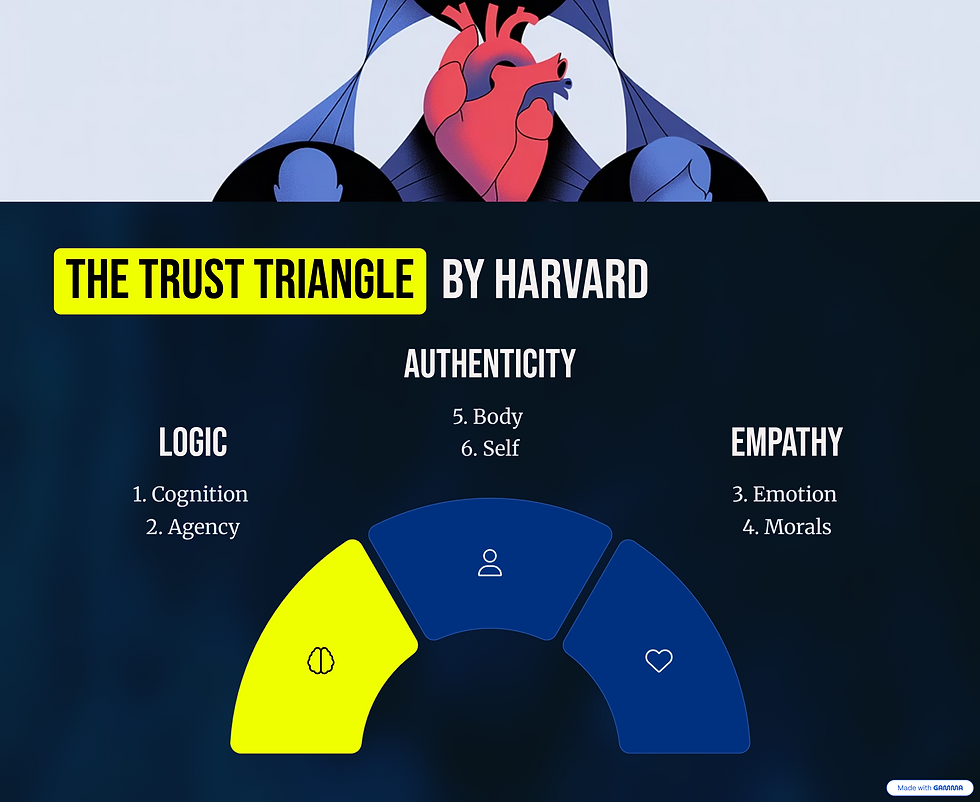

In that Booking.com talk, I used a simple model I love: The Trust Triangle from Harvard.

It’s a reminder that trust, and leadership, doesn’t live in one dimension. For each of the model's three dimensions, I picked two sub-dimensions as examples and dug into the current psychological research on them:

Logic (Cognition, Agency)

Empathy (Emotion, Morals)

Authenticity (Body, Self)

When we reduce the AI conversation to cognition, we accidentally reduce humans to “processors.” And that’s when the quiet anxiety starts. Because if your value is defined as processing… well, let's face it: you will feel replaceable!

Even in cognition, humans still have an edge

Here’s the nuance most people miss:

AI can outperform us in tasks.

But leadership is way more than just a task to be executed.

Even in the “Logic” corner, humans still bring capabilities that matter in today's organizations:

Judgment under ambiguity (when the data is incomplete and the stakes are real)

Context ownership (knowing what matters here, now, with these people)

Problem framing (deciding what the real question is — not just answering it)

Trade-offs and accountability (making a call and owning consequences)

Strategic prioritization (saying no, protecting focus, timing decisions)

If you’ve ever led through a reorg, a crisis, or a high-stakes launch, you know this: The hard part wasn’t “thinking.” The hard part was choosing.

And even more impact lives in the other two dimensions

Where humans create disproportionate value — especially in times of AI transformation — is in the dimensions we keep ignoring.

Empathy (Emotion + Morals)

Reading the room when people don’t feel safe to speak

Naming what’s unspoken without escalating it

Holding ethical lines when “efficiency” becomes the only metric

Making decisions that people can live with — not just decisions that look good on a slide

Authenticity (Body + Self)

This is the one leaders underestimate most. Because your nervous system is contagious.

People feel your regulation before they hear your strategy

Your presence sets the emotional temperature of the room

Your integrity (self-alignment) determines whether people trust your words

This is why the “human edge” isn’t a motivational poster. It’s a leadership operating system.

The strategic shift: 4 lenses to redesign your role in an AI world as a value function, not a process-driven task

If you want to feel (and be) valuable in an AI-shaped organization, don’t start by asking:

“What can AI do?”

Start here:

“How do I understand my role — and where does my human edge actually create value?”

Most leaders still describe their job as a list of tasks. But process-driven tasks are exactly what AI will keep eating. So here are four strategic lenses you can use to rethink your role. Not as a title. As a value function.

Lens 1: Your role as Decision Architecture

In complex organizations, the bottleneck is rarely information. It’s decisions. Your value isn’t that you “know a lot.”

Your value is that you help the system make better decisions:

Who decides what (and who shouldn’t)

What inputs matter (and what noise to ignore)

How fast decisions need to be made (and where slowness is wisdom)

How trade-offs are made explicit

Reflection prompts:

Where are decisions currently stuck — and why?

What decision do people keep escalating to me that I should redesign away?

Where do we confuse “more data” with “more clarity”?

Lens 2: Your role as Meaning-Making

AI can generate answers. But it cannot tell your organization what those answers mean.

You as a leader create meaning:

What matters this quarter (and what doesn’t)

What we stand for when it’s inconvenient

What “good” looks like now that the tools changed

What story we’re living inside of

Reflection prompts:

What story is my team currently telling itself about AI?

What story do I want them to live by instead?

Where do I need to say the quiet part out loud?

Lens 3: Your role as Trust Infrastructure

This is where the Trust Triangle becomes operational. In AI transformations, trust breaks in predictable places:

Logic: “Do we have a plan? Is this coherent?”

Empathy: “Do you see what this is doing to people?”

Authenticity: “Do you actually believe what you’re saying?”

Your role is to build trust across all three.

Reflection prompts:

Which corner of the triangle is weakest in my leadership right now?

Where do people not trust the plan, the care, or the integrity?

What conversation would increase trust the fastest — if I had the courage to have it?

Lens 4: Your role as a Human Edge Portfolio

In an AI world, your value compounds when you deliberately invest in the few human capabilities that scale through others.

Think of it as your “superpower stack.”

Examples:

Judgment under ambiguity

Ethical courage

Conflict navigation

Taste and strategic intuition

Presence and nervous system leadership

Building psychological safety without lowering standards

Reflection prompts:

What are my 2–3 human superpowers — the ones people come to me for?

Where in my calendar do they show up?

What would I stop doing if I fully trusted that this is my edge?

Here’s what I suggest you do this month

Take 15 minutes. No laptop. No AI. Write down:

Which of the 4 lenses do I currently lead from — by default?

Which lens is underdeveloped, but would matter most in 2026?

One change I’ll make in January to invest in my human edge (calendar, conversations, decisions).

If these reflections are valuable to you, I also recommend yesterday's post on reflecting on your leadership lessons from this year: "Forget ChatGPT prompts for the rest of the year."

What else is up

The YouTube video that shocked me most this month covers the current state of research on AI-induced psychosis in people who had not (!) struggled with mental health issues before. Please watch it.

The most important article I wrote this year, together with Elisa Schön, is "You’re Leaving Millions on the Table: The Economics of Emotion." If you haven't read it yet, now might be the time: Thomas Lindner, CEO of Innowerft, called it a "sharp, illuminating, and inspiring contribution".

I have written a detailed blog post about "Time management and self-organization: Strategies for more clarity and structure", in case you are interested in these topics.

Another viral post: My biggest failures over the last 6 years. Enjoy!

Completely off topic, but just as important to me: I have just released my debut album "The Fragile Order", synth pop made in Berlin. Listen in here if you like!

If this resonates

If you’ve felt that quiet question (“Am I still valuable?”), you’re not alone. And you’re not weak. You’re human. A good one! We need you on this planet! If this post has helped you understand your edge better, comment one word: EDGE.

And if you’re ready to move from survival mode back into clarity and power, feel free to reach out to me via stef@stefhuber.com.

— Stef